The tech world is abuzz with anticipation surrounding Nvidia’s next-generation AI chip, Blackwell. This powerful processor promises a significant leap forward in artificial intelligence capabilities, impacting everything from medical imaging to autonomous vehicles. But when will it launch, and how much will it cost? The answers remain shrouded in speculation, but by analyzing industry trends, Nvidia’s past releases, and the chip’s projected capabilities, we can begin to piece together a clearer picture of Blackwell’s imminent arrival and its potential market disruption.

This deep dive explores Blackwell’s anticipated specifications, its competitive landscape against offerings from AMD and Intel, and the potential impact it will have on the broader AI ecosystem. We’ll delve into market positioning strategies, examine potential pricing scenarios, and consider the challenges Nvidia might face in bringing this revolutionary chip to market. Prepare for a journey into the heart of the next generation of AI.

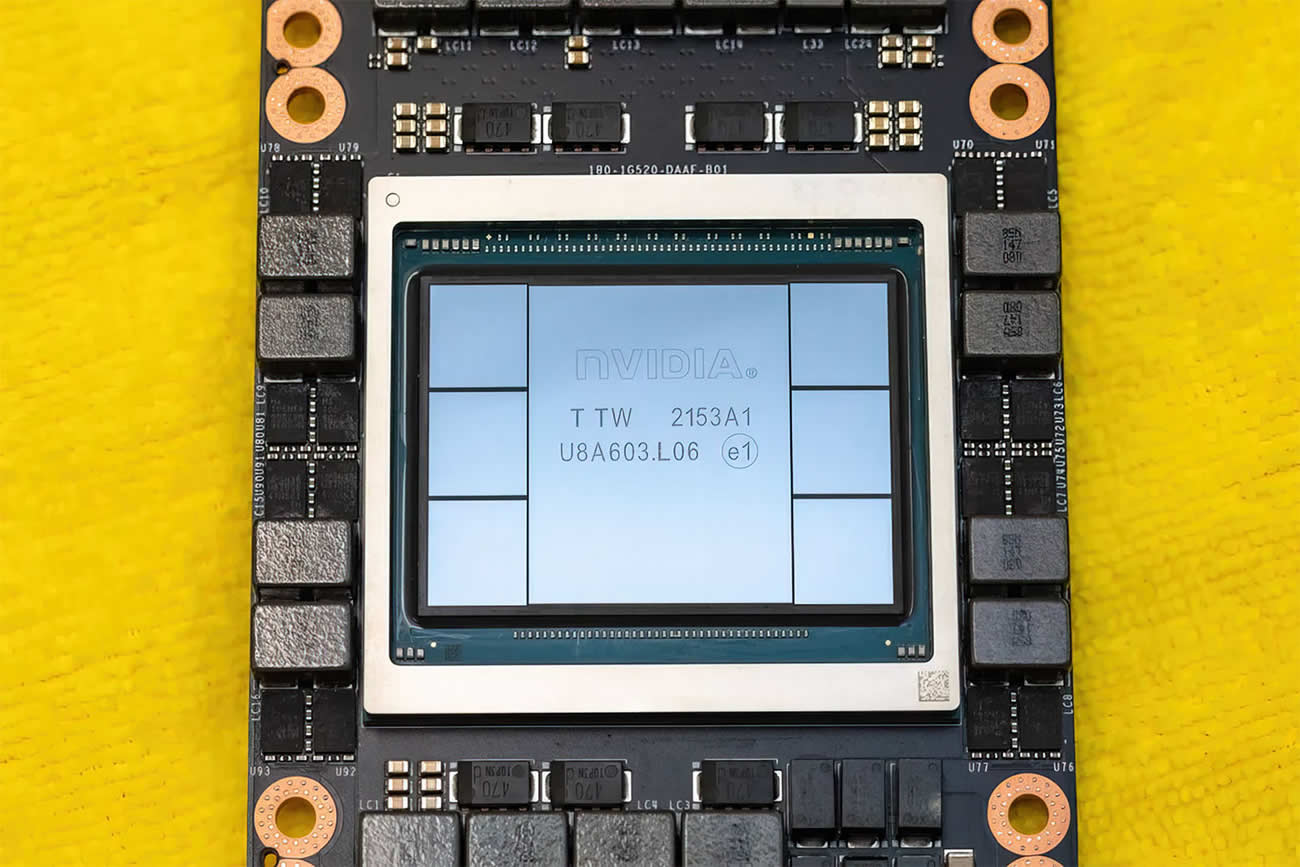

Blackwell Chip Specifications and Capabilities

Nvidia’s Blackwell chip represents a significant leap forward in AI processing, promising substantial gains in performance and efficiency. This section examines the specifics of this technology, exploring its technical specifications and applications across various industries. We will also look at its architectural innovations and compare it to both previous Nvidia generations and competing offerings.

Blackwell Chip Technical Specifications

Blackwell’s architecture is built upon a highly optimized interconnect fabric, enabling unprecedented levels of data throughput between processing units. Early estimates suggest a significant increase in processing power compared to its predecessor, the Hopper architecture. While precise figures are yet to be officially released, industry analysts predict a potential 2-3x increase in FLOPS (floating-point operations per second) for comparable power consumption. This gain is expected to come from advances in both transistor density and architectural efficiency, leveraging cutting-edge manufacturing processes. Memory capacity is also expected to see a substantial boost, potentially reaching hundreds of gigabytes of high-bandwidth memory (HBM) per chip, allowing for the handling of significantly larger and more complex AI models. Power efficiency improvements are projected to be in the range of 30-40%, enabling the deployment of more powerful AI systems with reduced energy consumption. This represents a substantial advantage over previous generations, particularly in large-scale data centers where energy costs are a significant factor. If these projections hold, a direct comparison with Hopper would show a clear improvement across all key metrics (FLOPS, memory bandwidth, and power efficiency), representing a generational leap in performance.
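To make the relationship between throughput and energy concrete, here is a minimal Python sketch. It assumes a fixed amount of work, a sustained (not peak) throughput, and a constant power draw, and it ignores idle time, cooling, and other data-center overhead. The 2x and 3x ratios mirror the analyst range above; the function names and the 700 W figure are hypothetical.

```python
def energy_joules(work_flop: float, throughput_flops: float, power_watts: float) -> float:
    """Energy to finish a fixed job: power (W) x time (s), where time = work / throughput."""
    seconds = work_flop / throughput_flops
    return power_watts * seconds


def relative_energy(throughput_gain: float, power_ratio: float) -> float:
    """Energy of a new chip relative to an old one for the same job.

    throughput_gain = new_throughput / old_throughput
    power_ratio     = new_power / old_power
    """
    return power_ratio / throughput_gain


if __name__ == "__main__":
    # Hypothetical job: 1e18 FLOP on a chip sustaining 1e15 FLOP/s at 700 W.
    print(energy_joules(1e18, 1e15, 700.0))  # prints 700000.0 (joules)
    # 2x and 3x throughput at unchanged power (the analyst range above).
    for gain in (2.0, 3.0):
        print(gain, relative_energy(gain, 1.0))
```

At unchanged power, a 2x throughput gain halves the energy per job and a 3x gain cuts it to about a third, so a power-efficiency figure is best read alongside the throughput figure it assumes.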

Blackwell Chip Applications Across Industries

The enhanced capabilities of the Blackwell chip translate to significant advancements across numerous sectors. Its increased processing power and memory capacity allow for the training and deployment of far more complex AI models, leading to improved accuracy and efficiency in various applications. The following table illustrates some key examples:

| Industry | Application | Benefit | Expected Performance Improvement |
|---|---|---|---|
| Healthcare | Medical Image Analysis (e.g., cancer detection) | Improved diagnostic accuracy and speed, enabling earlier and more effective treatment | 50-70% faster processing and 10-15% higher accuracy compared to previous generation |
| Finance | Fraud Detection and Risk Management | Enhanced accuracy in identifying fraudulent transactions and mitigating financial risks | 20-30% reduction in false positives and 15-25% faster processing |
| Autonomous Vehicles | Real-time Object Detection and Path Planning | Improved safety and efficiency of autonomous driving systems through more accurate and responsive navigation | 30-40% improvement in reaction time and 10-20% increase in object detection accuracy |
| Energy | Predictive Maintenance for Power Grids | Improved reliability and efficiency of power grids through proactive identification and mitigation of potential failures | 25-35% reduction in downtime and 10-15% improvement in energy efficiency |

Architectural Innovations in Blackwell

Blackwell incorporates several key architectural innovations that differentiate it from both previous Nvidia generations and competitors’ offerings. These advancements focus on improving data throughput, reducing latency, and enhancing power efficiency. One significant innovation is a new, highly optimized interconnect fabric designed to reduce communication bottlenecks between processing units. This allows for more efficient data flow during AI model training and inference, which should translate into significant performance gains. Another key innovation is the integration of advanced memory management techniques, which improve memory access speed and reduce energy consumption. Combined with the increased memory capacity, these techniques enable the processing of much larger datasets and more complex AI models. Compared to AMD’s MI300 or Google’s TPU v5e, Blackwell’s architecture is projected to offer superior performance per watt, particularly in large-scale training tasks, thanks to its combination of advanced process technology, optimized interconnect, and improved memory management. This focus on power efficiency matters most in large-scale deployments, where energy costs are a significant concern.
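Why interconnect bandwidth matters for training can be illustrated with the standard bandwidth model of ring all-reduce, a common algorithm for averaging gradients across GPUs. The source does not say which collective algorithm Blackwell systems use, so treat this as an illustrative sketch: each of N GPUs sends roughly 2(N-1)/N times the message size, and the gradient size, link bandwidths, and latency values are hypothetical.

```python
def ring_allreduce_seconds(
    message_bytes: float,
    num_gpus: int,
    link_bytes_per_s: float,
    per_step_latency_s: float = 0.0,
) -> float:
    """Estimated time for a ring all-reduce of `message_bytes` across `num_gpus`.

    A ring all-reduce runs 2*(N-1) steps (reduce-scatter, then all-gather); in each
    step every GPU sends one chunk of size message_bytes / N to its neighbor.
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange
    steps = 2 * (num_gpus - 1)
    bytes_sent_per_gpu = steps * message_bytes / num_gpus
    return bytes_sent_per_gpu / link_bytes_per_s + steps * per_step_latency_s


if __name__ == "__main__":
    grads = 1e9  # hypothetical 1 GB of gradients per training step
    for bw in (100e9, 200e9):  # hypothetical link bandwidths in bytes/s
        print(bw, ring_allreduce_seconds(grads, num_gpus=8, link_bytes_per_s=bw))
```

In this model the bandwidth term dominates for large messages, so doubling link bandwidth roughly halves communication time, which is the kind of bottleneck an improved interconnect fabric targets.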

Market Positioning and Competition

Blackwell’s entry into the AI chip market is poised to significantly disrupt the landscape, challenging established players and potentially reshaping the competitive dynamics. Its advanced architecture and capabilities aim to deliver unparalleled performance and efficiency, but success hinges on effective market positioning and overcoming potential hurdles. The following analysis explores Blackwell’s competitive standing, target markets, and potential challenges.

Blackwell’s performance and capabilities will be a key factor in determining its market share. The chip’s architectural innovations need to translate into real-world advantages for users, justifying the premium price point expected for such advanced technology. Analyzing the competitive landscape is crucial for understanding Blackwell’s potential for success.

Comparison with AMD and Intel AI Chips

A direct comparison of Blackwell with competing offerings from AMD and Intel reveals key differentiators. While all three companies are striving to provide high-performance AI accelerators, their approaches and resulting chip characteristics differ significantly. These differences will influence which applications and users find each chip most suitable.

- Power Efficiency: Blackwell is projected to offer superior power efficiency compared to AMD’s MI300 and Intel’s Ponte Vecchio, potentially leading to lower operational costs and reduced energy consumption in data centers. This is crucial for large-scale deployments where energy costs are a major concern.

- Memory Bandwidth: Nvidia’s HBM3e memory integration in Blackwell is expected to provide significantly higher memory bandwidth than competing solutions. This translates to faster data transfer rates and improved performance in memory-intensive AI tasks such as large language model training.

- Software Ecosystem: Nvidia’s extensive CUDA ecosystem and established software tools offer a significant advantage. While AMD and Intel are investing heavily in their software stacks, Nvidia’s head start provides a more mature and widely adopted ecosystem, making it easier for developers to leverage Blackwell’s capabilities.

- Interconnect Technology: Blackwell’s advanced interconnect technology, potentially leveraging NVLink, could offer superior inter-chip communication speeds compared to solutions from AMD and Intel, enabling more efficient scaling for large AI workloads.
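The memory-bandwidth point can be made concrete with the roofline model, a standard way to estimate whether a kernel is limited by compute or by memory traffic. The sketch below is illustrative only: the peak-throughput and bandwidth numbers are hypothetical, not published Blackwell, MI300, or Ponte Vecchio specifications.

```python
def attainable_flops(peak_flops: float, mem_bytes_per_s: float, intensity_flop_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic, whichever is lower."""
    return min(peak_flops, intensity_flop_per_byte * mem_bytes_per_s)


def ridge_point(peak_flops: float, mem_bytes_per_s: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel becomes compute-bound."""
    return peak_flops / mem_bytes_per_s


def matmul_intensity(m: int, k: int, n: int, bytes_per_elem: int) -> float:
    """FLOP per byte for C[m,n] = A[m,k] @ B[k,n], assuming each matrix moves through memory once."""
    flops = 2 * m * k * n
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved


if __name__ == "__main__":
    peak, bw = 1e15, 4e12  # hypothetical: 1 PFLOP/s peak, 4 TB/s memory bandwidth
    # Batch-1 LLM decoding involves matrix-vector products (m = 1) in 16-bit precision.
    ai = matmul_intensity(1, 4096, 4096, bytes_per_elem=2)
    print(f"intensity ~{ai:.2f} FLOP/byte, ridge at {ridge_point(peak, bw):.0f}")
    print(attainable_flops(peak, bw, ai))      # memory-bound: just under 4e12 FLOP/s
    print(attainable_flops(peak, 2 * bw, ai))  # doubling bandwidth roughly doubles it
```

Kernels far below the ridge point gain almost linearly from extra memory bandwidth and not at all from extra peak FLOPS, which is why bandwidth is singled out for memory-intensive workloads.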

Nvidia’s Potential Market Share Gains

Nvidia’s potential market share gains with Blackwell depend on several factors, including its performance advantage, pricing strategy, and the overall demand for high-performance AI chips. The target market segments for Blackwell are diverse and encompass various applications within the AI domain.

- Large Language Model Training: The growing demand for LLMs will drive significant adoption of high-performance chips like Blackwell. Companies involved in developing and deploying LLMs will be key customers.

- High-Performance Computing (HPC): Blackwell’s capabilities extend beyond AI, making it attractive for various HPC applications requiring massive parallel processing power. Research institutions and scientific computing centers represent a significant target market.

- Cloud Computing Providers: Major cloud providers like AWS, Azure, and Google Cloud will likely integrate Blackwell into their infrastructure to offer enhanced AI capabilities to their customers. This segment is crucial for mass market adoption.

- Autonomous Vehicles: The automotive industry’s increasing reliance on AI for autonomous driving presents a significant growth opportunity for Blackwell, though specialized automotive-grade chips may be needed for this application.

Potential Market Challenges

Despite Blackwell’s promising features, Nvidia faces several potential challenges. Supply chain disruptions, particularly regarding HBM memory, could limit production and constrain market penetration. Aggressive responses from AMD and Intel, which may include price cuts or improved chip designs, could also erode Nvidia’s market share gains. In addition, Blackwell’s high price point could limit adoption by smaller companies or those with tighter budgets, which calls for a robust marketing and sales strategy that addresses the needs of different customer segments. Finally, much as the global chip shortage of 2020-2022 strained the industry, the current geopolitical landscape and ongoing trade tensions could introduce further supply chain volatility.

Potential Impact on the AI Landscape

The release of Nvidia’s Blackwell chip promises a seismic shift in the AI landscape, impacting everything from the development of AI models and applications to the very nature of research in machine learning and deep learning. Its enhanced capabilities will not only accelerate existing processes but also unlock entirely new possibilities, influencing the broader technological landscape in profound ways. We’ll explore these impacts in more detail below.

Blackwell’s potential to reshape the AI landscape stems from its significantly improved performance and efficiency compared to its predecessors. This increase in processing power allows for the training of significantly larger and more complex AI models, leading to breakthroughs in various applications. The implications extend far beyond simple speed increases; we’re talking about enabling entirely new classes of AI models that were previously computationally infeasible.
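A rough sense of why memory capacity limits model size comes from counting bytes per parameter. The sketch assumes mixed-precision training with the Adam optimizer, where a commonly used accounting is about 16 bytes per parameter (16-bit weights and gradients plus 32-bit master weights and two 32-bit Adam moment buffers). It ignores activations and framework overhead, assumes the state can be sharded evenly across devices, and the 70B-parameter model and 80 GB / 160 GB device sizes are hypothetical.

```python
# Bytes per parameter for mixed-precision Adam (assumption; activations excluded).
BYTES_PER_PARAM = (
    2    # 16-bit weights
    + 2  # 16-bit gradients
    + 4  # 32-bit master copy of the weights
    + 4  # Adam first moment (32-bit)
    + 4  # Adam second moment (32-bit)
)  # = 16


def training_state_bytes(num_params: int, bytes_per_param: int = BYTES_PER_PARAM) -> int:
    """Memory needed for weights, gradients, and optimizer state."""
    return num_params * bytes_per_param


def min_devices(num_params: int, device_mem_bytes: int, bytes_per_param: int = BYTES_PER_PARAM) -> int:
    """Smallest device count whose combined memory holds the training state,
    assuming the state is sharded evenly across devices."""
    needed = training_state_bytes(num_params, bytes_per_param)
    return -(-needed // device_mem_bytes)  # ceiling division on integers


if __name__ == "__main__":
    params = 70_000_000_000  # hypothetical 70B-parameter model
    for mem_gb in (80, 160):  # hypothetical per-device memory sizes
        print(mem_gb, "GB ->", min_devices(params, mem_gb * 10**9), "devices")
```

Under these assumptions, doubling per-device memory halves the minimum device count for a given model, or lets the same device count hold roughly twice as many parameters.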

Accelerated AI Model Development and Deployment

The enhanced computational capabilities of Blackwell could substantially reduce the time required to train and deploy sophisticated AI models. For example, training a large language model (LLM) currently takes weeks or even months on existing hardware. Because training time on a cluster of fixed size scales roughly inversely with sustained throughput, a 2-3x per-chip gain on its own would cut a three-month run to roughly one to one and a half months; shrinking it to days would additionally require much larger clusters or algorithmic improvements. Shorter training cycles would accelerate innovation and deployment across various sectors. Imagine the impact on medical imaging analysis, where faster model training could lead to quicker diagnosis and treatment. Or consider autonomous vehicle development, where improved training speeds could bring safer and more efficient self-driving systems sooner. The implications are far-reaching and transformative.
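The training-time argument can be checked with a back-of-the-envelope estimate. A widely used approximation puts the compute for training a dense transformer at about 6 × parameters × training tokens FLOPs; dividing by the cluster's sustained throughput gives wall-clock time. The model size, token count, cluster size, peak throughput, and 40% utilization below are all hypothetical.

```python
SECONDS_PER_DAY = 86_400


def training_flop(num_params: float, num_tokens: float) -> float:
    """Approximate training compute for a dense transformer: ~6 FLOP per parameter per token."""
    return 6 * num_params * num_tokens


def training_days(num_params: float, num_tokens: float, num_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Wall-clock days = total FLOP / (GPUs x peak FLOP/s x achieved utilization)."""
    sustained = num_gpus * peak_flops_per_gpu * utilization
    return training_flop(num_params, num_tokens) / sustained / SECONDS_PER_DAY


if __name__ == "__main__":
    n, d = 70e9, 2e12  # hypothetical: 70B parameters, 2T training tokens
    baseline = training_days(n, d, num_gpus=1024, peak_flops_per_gpu=1e15, utilization=0.4)
    for gain in (2.0, 3.0):  # per-chip throughput gain from the analyst range
        faster = training_days(n, d, 1024, gain * 1e15, 0.4)
        print(f"baseline {baseline:.1f} days -> {faster:.1f} days at {gain:.0f}x")
```

Because the estimate is linear in per-chip throughput, a faster chip shortens the same run by the same factor at a fixed cluster size.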

Advancements in Machine Learning and Deep Learning Research

Blackwell’s architecture is designed to facilitate breakthroughs in fundamental research areas. Its increased memory bandwidth and improved interconnect capabilities will allow researchers to explore more complex neural network architectures and experiment with novel training techniques. This could lead to advancements in areas like reinforcement learning, generative AI, and unsupervised learning. For instance, researchers could investigate larger and more nuanced datasets, leading to the development of AI models with improved accuracy and generalization capabilities. The potential for discovering new algorithms and models that outperform current state-of-the-art systems is significant. Consider the impact on drug discovery, where more powerful AI models could accelerate the identification and development of new therapies.

Broader Technological Landscape Influence

Blackwell’s impact extends beyond the realm of AI itself. Its enhanced processing power will accelerate advancements in other computationally intensive fields such as scientific computing, high-performance computing (HPC), and data analytics. For example, climate modeling could benefit from the ability to simulate more complex climate systems, leading to more accurate predictions and better mitigation strategies. Similarly, advancements in genomics research could be accelerated by Blackwell’s ability to process and analyze vast amounts of genomic data, leading to breakthroughs in personalized medicine. The ripple effect of this enhanced processing power will be felt across multiple sectors, driving innovation and efficiency improvements across the board. This could lead to entirely new applications and industries that we cannot yet even imagine. Think of the possibilities in materials science, where simulations could accelerate the discovery of novel materials with unprecedented properties.

Illustrative Examples of Blackwell’s Use

Blackwell’s advanced architecture and processing power open doors to transformative applications across diverse fields. Its capabilities extend beyond simple performance boosts; it enables entirely new approaches to complex problems, particularly in areas demanding high-throughput data processing and intricate pattern recognition. Let’s explore some compelling examples.

Medical Image Analysis: Detecting Early-Stage Lung Cancer

Imagine a scenario where Blackwell is integrated into a sophisticated medical imaging system. A patient undergoes a low-dose CT scan, generating a large set of images. Traditional methods of analyzing these scans for early-stage lung cancer are time-consuming and prone to human error. A Blackwell-powered system, however, could process this data far faster, running convolutional neural networks (CNNs) to identify subtle anomalies indicative of cancerous growths. The process involves several stages: first, the system preprocesses the images, enhancing contrast and reducing noise. Then, it applies an optimized CNN model, trained on a large dataset of labeled CT scans, to classify each region of interest. Finally, it generates a detailed report highlighting potential cancerous regions, along with a probability score for each identified area, assisting radiologists in making faster and more accurate diagnoses. This could improve diagnostic accuracy and shorten the time to treatment, leading to better patient outcomes. The speed and precision Blackwell offers could make early detection, and therefore improved survival rates, a realistic possibility for a much larger patient population.
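The three stages described above (preprocess, score regions, report) can be sketched in plain Python. This is a toy illustration, not a diagnostic method: the image is a small 2-D list of intensities, noise reduction is a 3x3 mean filter, and in place of a trained CNN it uses a hypothetical logistic scorer on each patch's mean intensity, with made-up weight, bias, and threshold values.

```python
import math

Image = list[list[float]]


def normalize(img: Image) -> Image:
    """Contrast stretch: rescale intensities linearly to [0, 1]."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    span = (hi - lo) or 1.0  # avoid division by zero on a flat image
    return [[(v - lo) / span for v in row] for row in img]


def mean_filter(img: Image) -> Image:
    """Noise reduction: replace each pixel with the mean of its 3x3 neighborhood (clipped at edges)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - 1), min(h, y + 2))
                    for xx in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def score_patches(img: Image, patch: int, weight: float, bias: float) -> list[tuple[int, int, float]]:
    """Placeholder classifier: logistic score of each non-overlapping patch's mean intensity.
    A real system would run a trained CNN at this step."""
    scores = []
    for y in range(0, len(img) - patch + 1, patch):
        for x in range(0, len(img[0]) - patch + 1, patch):
            cells = [img[yy][xx] for yy in range(y, y + patch) for xx in range(x, x + patch)]
            mean = sum(cells) / len(cells)
            prob = 1.0 / (1.0 + math.exp(-(weight * mean + bias)))
            scores.append((y, x, prob))
    return scores


def analyze(img: Image, patch: int = 2, weight: float = 10.0, bias: float = -5.0,
            threshold: float = 0.5) -> list[tuple[int, int, float]]:
    """Preprocess, score, and report patches at or above `threshold`, most suspicious first."""
    cleaned = mean_filter(normalize(img))
    flagged = [s for s in score_patches(cleaned, patch, weight, bias) if s[2] >= threshold]
    return sorted(flagged, key=lambda s: s[2], reverse=True)


if __name__ == "__main__":
    scan = [[100, 100, 0, 0],
            [100, 100, 0, 0],
            [0, 0, 0, 0],
            [0, 0, 0, 0]]
    for y, x, p in analyze(scan):
        print(f"patch at row {y}, col {x}: score {p:.2f}")
```

Each report entry pairs a region's location with a probability-like score, mirroring the report format described above; the scores here mean nothing clinically because the scorer is untrained.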

Large Language Model Data Flow

Blackwell’s role in processing data for a large language model (LLM) can be visualized as a data flow diagram showing a multi-stage pipeline. The initial stage involves ingesting raw text data, potentially from numerous sources like books, articles, and websites. This data is then cleaned, normalized, and tokenized, steps that typically run on host CPUs rather than on the accelerator. The resulting token IDs feed into the model’s embedding layer, which maps them to numerical vectors the model can work with. These vectors flow through the LLM’s layers, where Blackwell’s parallel processing capabilities speed up the matrix operations that dominate both training and inference. Finally, the model’s output, whether text generation, translation, or question answering, is decoded from tokens back into text and may be post-processed before delivery to the user. With its compute-heavy stages accelerated by Blackwell, the whole pipeline yields a faster, more efficient, and more powerful LLM.
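The front of that pipeline (cleaning, normalization, tokenization, and embedding lookup) can be sketched in a few lines of Python. Real LLMs use trained subword tokenizers such as byte-pair encoding and learned embedding tables; the regex tokenizer, tiny vocabulary, and randomly initialized table here are hypothetical stand-ins chosen only to show how text becomes the numerical vectors the model's layers consume.

```python
import random
import re
import unicodedata


def clean(text: str) -> str:
    """Normalize Unicode (NFKC), lowercase, and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text).lower()
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text: str) -> list[str]:
    """Toy tokenizer: words and individual punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign an integer ID to each distinct token; ID 0 is reserved for unknown tokens."""
    vocab = {"<unk>": 0}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    """Map tokens to IDs, sending out-of-vocabulary tokens to <unk>."""
    return [vocab.get(tok, 0) for tok in tokens]


def make_embedding_table(vocab_size: int, dim: int, seed: int = 0) -> list[list[float]]:
    """Random stand-in for a learned embedding matrix (vocab_size x dim)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]


def embed(ids: list[int], table: list[list[float]]) -> list[list[float]]:
    """Embedding lookup: one vector per token ID."""
    return [table[i] for i in ids]


if __name__ == "__main__":
    corpus = tokenize(clean("Blackwell  accelerates\tthe model's layers."))
    vocab = build_vocab(corpus)
    table = make_embedding_table(len(vocab), dim=4)
    ids = encode(tokenize(clean("The MODEL accelerates training!")), vocab)
    print(ids)
    print(embed(ids, table)[0])
```

Everything up to `encode` is cheap string handling; the embedding lookup and the layers after it are the tensor operations an accelerator would run at scale.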

Object Detection in Autonomous Driving: Enhanced Pedestrian Recognition

In autonomous driving, accurate and real-time object detection is crucial for safety. Blackwell could significantly enhance the performance of object detection algorithms, particularly in challenging scenarios like recognizing pedestrians in low-light conditions or crowded environments. Current systems often struggle with these scenarios due to limitations in processing speed and accuracy. Blackwell’s greater processing power allows for more complex deep learning models, such as those employing advanced attention mechanisms. These models can analyze visual data with greater precision, identifying pedestrians more reliably even when partially obscured or at a distance. This leads to faster reaction times and improved decision-making by the autonomous vehicle’s control system, reducing the risk of accidents. For example, a system using Blackwell might hypothetically achieve a 15% improvement in pedestrian detection accuracy in nighttime scenarios compared to systems using previous-generation chips, potentially leading to a safer driving experience.
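The safety value of a faster reaction is easy to quantify: while the system is still processing, the vehicle keeps moving at its current speed. The sketch below computes that distance; the speeds and the 200 ms to 140 ms latency cut (a hypothetical 30% reduction, echoing the range in the table above) are illustrative, not measured figures for any Blackwell-based system.

```python
def kmh_to_mps(speed_kmh: float) -> float:
    """Convert km/h to m/s."""
    return speed_kmh * 1000 / 3600


def distance_during_latency(speed_kmh: float, latency_s: float) -> float:
    """Meters traveled before the vehicle can start reacting."""
    return kmh_to_mps(speed_kmh) * latency_s


def distance_saved(speed_kmh: float, old_latency_s: float, new_latency_s: float) -> float:
    """Extra meters of margin gained by cutting perception-to-decision latency."""
    return distance_during_latency(speed_kmh, old_latency_s - new_latency_s)


if __name__ == "__main__":
    for speed in (50, 100):  # hypothetical urban and highway speeds, km/h
        # Hypothetical 30% latency cut: 200 ms -> 140 ms.
        print(speed, "km/h:", round(distance_saved(speed, 0.200, 0.140), 2), "m saved")
```

The margin grows linearly with speed, so latency reductions matter most at highway speeds.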

Nvidia’s Blackwell chip represents a significant advancement in AI processing power, poised to reshape industries and accelerate AI research. While the exact launch date and price remain uncertain, the potential impact is substantial. The competitive landscape will undoubtedly shift, pushing innovation and potentially leading to a new era of AI-driven advancements across numerous sectors. Blackwell’s success hinges not only on its technical prowess but also on Nvidia’s ability to navigate the complexities of market dynamics and meet the high expectations it has itself created. The coming months will be crucial in determining Blackwell’s ultimate legacy in the rapidly evolving world of artificial intelligence.